EDM 2020 Tutorial: An introduction to neural networks

Recordings are available:

In this tutorial, participants explore the fundamentals of feedforward neural networks, such as the backpropagation mechanism, as well as Long Short Term Memory (LSTM) neural networks. The tutorial also covers the basics of Deep Knowledge Tracing, the attention mechanism and applications of neural networks in education. The hands-on parts use open educational datasets. Participants should leave the tutorial able to use neural networks in their own research.

Description

Neural networks (NN) are as old as the relatively young history of computer science: McCulloch and Pitts already proposed nets of abstract neurons in 1943, as Haigh and Priestley report in [5]. However, it is their successful use in recent years, especially in the form of convolutional neural networks (CNN) or Long Short Term Memory (LSTM) neural networks, in areas such as image recognition and language translation that has made them widely known, including in the Educational Data Mining (EDM) community. This is reflected in the contributions published each year in the proceedings of the conference.

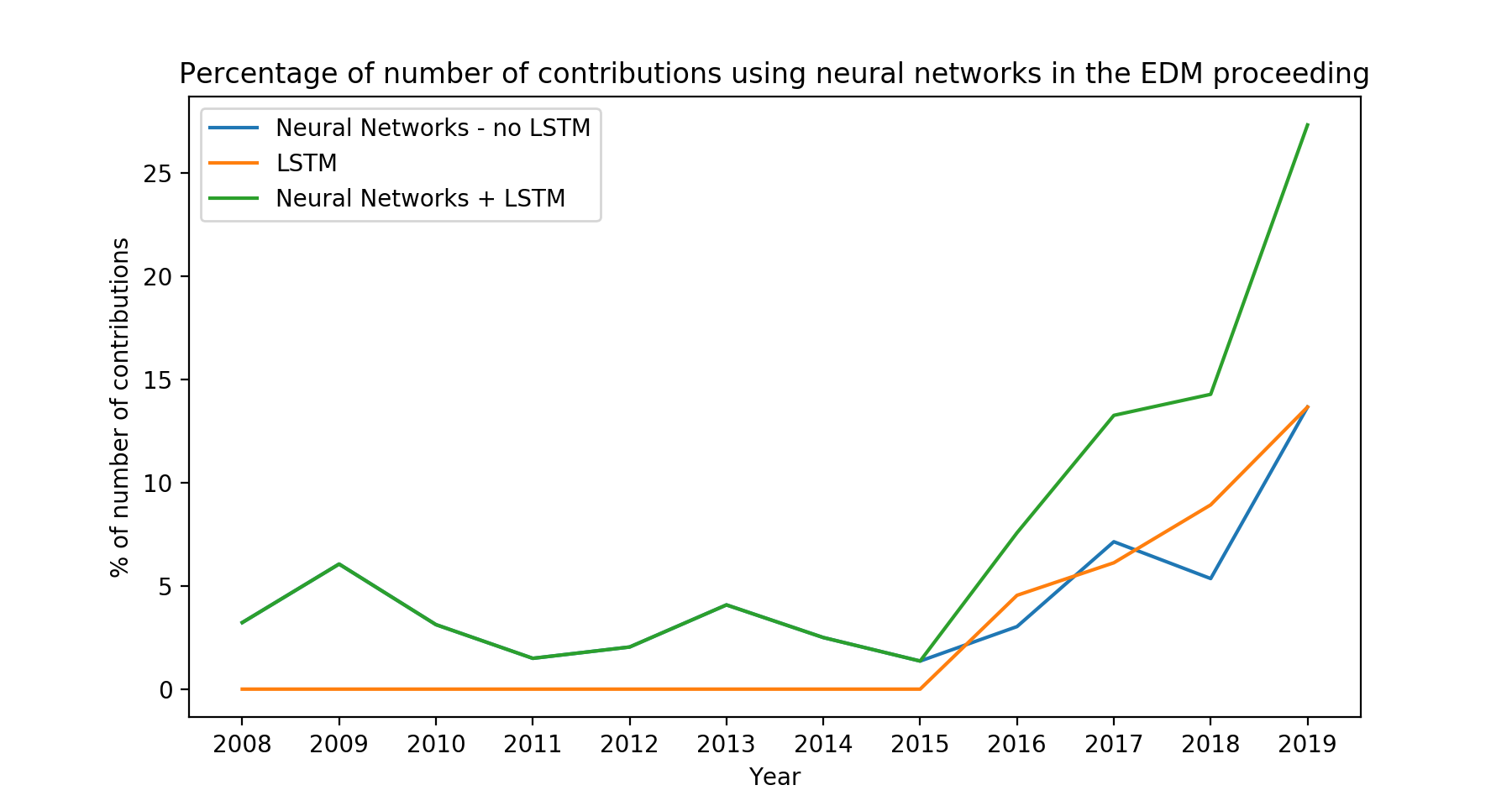

The upper green curve of this figure, labeled “Neural Networks + LSTM”, shows the percentage of contributions (long and short papers, posters and demos, young research track, doctoral consortium, and industry track papers) that used some kind of neural network in their research. Contributions that mention neural networks only in the related work or future work are not counted. One notices two jumps, in 2016 and in 2019: the number of such contributions goes from two to ten and then from 16 to 32, while the total number of contributions goes from 147 to 132 and then from 112 to 139. Neural networks are thus becoming increasingly important in our field. The blue curve, “Neural Networks + no LSTM”, gives the percentage of contributions that used neural networks other than LSTM neural networks (simply called LSTM in the following), while the orange curve, “LSTM”, gives the percentage of contributions that used LSTM (these contributions might also have used other kinds of neural networks). Until 2015, the green and blue curves overlap, as no contribution used LSTM.
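The counts quoted above translate directly into the percentages plotted by the green curve. A minimal sketch, assuming that the "before" counts belong to 2015 and 2018, the years just before the two jumps (the text implies this but does not state it):

```python
# Counts quoted in the text. Assumption: the "before" values belong to 2015
# and 2018, the years immediately preceding the jumps in 2016 and 2019.
COUNTS = {
    2015: (2, 147),   # (contributions using neural networks, all contributions)
    2016: (10, 132),
    2018: (16, 112),
    2019: (32, 139),
}


def share(nn_papers: int, all_papers: int) -> float:
    """Percentage of contributions that used some kind of neural network."""
    return 100.0 * nn_papers / all_papers


for year, (nn, total) in COUNTS.items():
    print(f"{year}: {share(nn, total):.1f}%")
# 2015: 1.4%
# 2016: 7.6%
# 2018: 14.3%
# 2019: 23.0%
```

Although the total number of contributions fell in 2016, the share of contributions using neural networks rose more than fivefold, which is why the first jump is so visible in the percentage curve.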

Recognizing the growing importance of neural networks in the EDM community, this tutorial aims to provide 1) an introduction to neural networks in general and to LSTM neural networks with a focus on the attention mechanism, and 2) a discussion venue on these exciting techniques. This tutorial targets 1) participants who have no or very little prior knowledge about neural networks and would like to use them in their future work or would like to better understand the work of others, and 2) participants interested in exchanging and discussing their experience with the use of neural networks.

Learning outcome

The objectives of this tutorial are twofold:

- Introduce the fundamental concepts and algorithms of neural networks to newcomers, and then build on these fundamentals to give them an understanding of LSTM and the attention mechanism;

- Provide a place to discuss and exchange experiences of using neural networks with educational data.

Newcomers should leave the tutorial with a good understanding of neural networks and the ability to use them in their own research, or to better appreciate research that uses neural networks. Participants already knowledgeable about neural networks get a chance to discuss and share their experience on this topic and to connect with others.

Schedule

| Time (UTC+1, Morocco) | Item | Presenter |
|---|---|---|
| 1:00 - 1:15 pm | Introduction, presentation | Agathe Merceron and Ange Tato |
| 1:15 - 2:00 pm | Feedforward neural networks and backpropagation | Agathe Merceron |
| 2:00 - 2:45 pm | Application - Implementation of a feedforward neural network for dropout prediction - Group work and discussion | Agathe Merceron |
| 2:45 - 3:00 pm | Break | |
| 3:00 - 4:00 pm | LSTM and attention mechanism | Ange Tato |
| 4:00 - 5:00 pm | Application - Implementation of an LSTM for student performance prediction - Group work and discussion | Ange Tato |

Introduction to feedforward neural networks

This part begins with artificial neurons and their structure (inputs, weights, output and activation function) and with the computations that a single neuron can and cannot perform. It continues with feedforward neural networks, or multi-layer perceptrons (MLP). A hands-on example taken from [7] illustrates how a feedforward neural network calculates its output. This part then introduces the backpropagation algorithm and makes clear what a feedforward neural network learns. Backpropagation is demonstrated with the same hands-on example.
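The forward pass and one backpropagation step can be sketched in plain Python. This is a minimal sketch, not the tutorial's material, assuming a network with two inputs, two hidden neurons and two outputs, sigmoid activations, a squared-error loss, one shared bias per layer that is kept fixed, and a learning rate of 0.5. The function names are ours, and the starting values follow the style of small step-by-step walkthroughs such as [7]; treat them as illustrative and check [7] for its exact numbers.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward pass of a 2-layer network with sigmoid units.

    W1[j][i] connects input i to hidden neuron j, W2[k][j] connects hidden
    neuron j to output neuron k; b1 and b2 are one shared bias per layer.
    """
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b1) for row in W1]
    o = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b2) for row in W2]
    return h, o


def loss(o, target):
    """Squared error E = sum_k 1/2 (target_k - o_k)^2."""
    return sum(0.5 * (t - y) ** 2 for y, t in zip(o, target))


def backprop_step(x, target, W1, b1, W2, b2, lr=0.5):
    """One gradient-descent update of W1 and W2 (biases kept fixed here)."""
    h, o = forward(x, W1, b1, W2, b2)
    # Output deltas: dE/dnet_k = (o_k - t_k) * o_k * (1 - o_k) for sigmoid units.
    d_out = [(y - t) * y * (1 - y) for y, t in zip(o, target)]
    # Hidden deltas: send the output deltas back through the *old* W2.
    d_hid = [
        sum(d_out[k] * W2[k][j] for k in range(len(W2))) * h[j] * (1 - h[j])
        for j in range(len(h))
    ]
    # dE/dw = delta of the receiving neuron * activation of the sending one.
    new_W2 = [[W2[k][j] - lr * d_out[k] * h[j] for j in range(len(h))]
              for k in range(len(W2))]
    new_W1 = [[W1[j][i] - lr * d_hid[j] * x[i] for i in range(len(x))]
              for j in range(len(W1))]
    return new_W1, new_W2


# Illustrative 2-2-2 network.
x, target = [0.05, 0.10], [0.01, 0.99]
W1, b1 = [[0.15, 0.20], [0.25, 0.30]], 0.35
W2, b2 = [[0.40, 0.45], [0.50, 0.55]], 0.60

_, o = forward(x, W1, b1, W2, b2)
print(round(loss(o, target), 4))  # 0.2984
W1, W2 = backprop_step(x, target, W1, b1, W2, b2)
_, o = forward(x, W1, b1, W2, b2)
print(loss(o, target) < 0.2984)   # True: the error drops after one update
```

What the network learns is exactly the weights: each update moves every weight against the gradient of the error, and repeating the step drives the outputs toward the targets.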

Application - Discussion - Hands-on with RapidMiner

This part discusses the use of feedforward neural networks in EDM research. These networks are often used to predict students’ performance and to identify students at risk of dropping out; see for example [4, 1, 15]. Other uses are emerging, however. For example, Ren et al. use them to model how all the other courses that a student has co-taken influence the student's grade in a given course [10]. The main activity of this part is for participants to create, inspect and evaluate a feedforward neural network with the free version of the tool RapidMiner Studio [9]. RapidMiner Studio is a graphical data science tool that requires no programming. The tool will be introduced, and participants will learn to load data, explore it and classify it with neural networks.

LSTM and Attention mechanism

In this part of the tutorial, the basic concepts of LSTM are covered. We focus on how the different elements of the architecture (cell, states, gates, etc.) work. Participants will learn how to use an LSTM to predict learners' outcomes in an educational system. Concepts such as Deep Knowledge Tracing (DKT) will also be covered. The attention mechanism is introduced as well: participants will learn how it works and how to use it in different cases. We will explore concepts such as global and local attention in neural networks.
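The gate computations of an LSTM cell, and the way DKT feeds it one student interaction at a time, can be sketched in plain Python. This is a minimal, untrained sketch assuming the common LSTM variant with a forget gate (the forget gate was not part of the original 1997 formulation [6]), a DKT input encoded as a one-hot vector over (skill, correctness) pairs as in [8], and a sigmoid output layer giving one probability of a correct answer per skill. The function names, the one-hot index convention and the initialization are our own assumptions; the tutorial notebooks use Keras instead.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Return W v + b for a list-of-rows matrix W."""
    return [sum(w * vi for w, vi in zip(row, v)) + bj for row, bj in zip(W, b)]


def dkt_one_hot(skill: int, correct: bool, num_skills: int):
    """DKT-style input vector of length 2 * num_skills.

    Convention assumed here: index `skill` for an incorrect answer,
    index `num_skills + skill` for a correct one.
    """
    x = [0.0] * (2 * num_skills)
    x[skill + num_skills * int(correct)] = 1.0
    return x


def init_params(n_hidden: int, n_in: int, n_skills: int, seed: int = 0):
    """Small random weights; forget-gate bias set to 1.0 (a common choice)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    p = {g: mat(n_hidden, n_hidden + n_in) for g in ("Wf", "Wi", "Wg", "Wo")}
    p.update(bf=[1.0] * n_hidden, bi=[0.0] * n_hidden,
             bg=[0.0] * n_hidden, bo=[0.0] * n_hidden)
    p.update(Wy=mat(n_skills, n_hidden), by=[0.0] * n_skills)
    return p


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step (common variant with a forget gate)."""
    z = h_prev + x                                            # [h_{t-1}; x_t]
    f = [sigmoid(a) for a in affine(p["Wf"], p["bf"], z)]     # forget gate
    i = [sigmoid(a) for a in affine(p["Wi"], p["bi"], z)]     # input gate
    g = [math.tanh(a) for a in affine(p["Wg"], p["bg"], z)]   # candidate values
    o = [sigmoid(a) for a in affine(p["Wo"], p["bo"], z)]     # output gate
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]  # cell state
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]                     # hidden state
    return h, c


def predict(h, p):
    """DKT-style output: one probability of a correct answer per skill."""
    return [sigmoid(a) for a in affine(p["Wy"], p["by"], h)]


# Hypothetical log of (skill id, answered correctly) for one student.
num_skills, n_hidden = 3, 4
p = init_params(n_hidden, 2 * num_skills, num_skills)
h, c = [0.0] * n_hidden, [0.0] * n_hidden
for skill, correct in [(0, True), (2, False), (0, True)]:
    h, c = lstm_step(dkt_one_hot(skill, correct, num_skills), h, c, p)
print([round(q, 3) for q in predict(h, p)])  # untrained, so values near 0.5
```

The cell state `c` is updated additively (forget part of the old content, add gated new content), which is what lets an LSTM carry information about a student's earlier answers across many steps.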

Application - LSTM and Attention mechanism

In this hands-on part, we will explore existing real-life applications of LSTM in education, especially Deep Knowledge Tracing. We will also explore the combination of LSTM with expert knowledge (using the attention mechanism) to predict socio-moral reasoning skills [14]. Participants will implement an LSTM with an attention mechanism to predict students’ performance in a tutoring system. We will code in Python, mainly with the Keras library, and use open educational datasets (e.g., the Assistments benchmark dataset).
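At its core, the attention mechanism computes a weighted average of the hidden states of a sequence, with weights given by a softmax over relevance scores. The following is a minimal sketch in plain Python, not the code of the tutorial notebooks or of Attetion_bn.py: it assumes dot-product scores, uses the last hidden state as the query, and contrasts global attention (all positions) with a local variant restricted to a window around a chosen position. All names and the example states are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights, states):
    """Context vector: sum over positions s of weights[s] * states[s]."""
    dim = len(states[0])
    return [sum(w * s[k] for w, s in zip(weights, states)) for k in range(dim)]


def global_attention(query, states):
    """Attend over every position: dot-product scores, softmax, context."""
    scores = [sum(q * v for q, v in zip(query, s)) for s in states]
    weights = softmax(scores)
    return weights, weighted_sum(weights, states)


def local_attention(query, states, center, half_width):
    """Attend only to positions in [center - half_width, center + half_width];
    positions outside the window get weight 0."""
    lo = max(0, center - half_width)
    hi = min(len(states), center + half_width + 1)
    window_weights, _ = global_attention(query, states[lo:hi])
    weights = [0.0] * len(states)
    weights[lo:hi] = window_weights
    return weights, weighted_sum(weights, states)


# Hypothetical LSTM hidden states for 4 time steps (dimension 2).
states = [[0.1, 0.0], [0.9, 0.2], [0.0, 1.0], [0.5, 0.5]]
query = states[-1]  # assumption: the last hidden state serves as the query

w_glob, ctx = global_attention(query, states)
w_loc, ctx_loc = local_attention(query, states, center=3, half_width=1)
print(w_loc[:2])  # [0.0, 0.0]: steps 0 and 1 lie outside the window
```

In a trained model, the scores would come from learned parameters rather than a plain dot product, and the context vector would typically be combined with the last hidden state before the output layer.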

Material

The tutorial material consists of slides (see the schedule section) and some files for the application parts.

Application - Part I:

Application - Part II:

- Assistments dataset

- Split file

- Jupyter Notebook 1

- Jupyter Notebook 2

- Attetion_bn.py (used in the second notebook).

- bn_data.csv

- rawData_kn.csv

Presenters

Agathe Merceron, Beuth University of Applied Sciences Berlin. Ange Tato, Université du Québec à Montréal.

To do before the tutorial

- Please add your information here.

- Download and install RapidMiner Studio (you will need to create an account, which is free).

- Download and install Jupyter Notebook for part 2 of this tutorial.

- Download and install Python 3.7 or higher.

- Download and save this requirements file.

- In a command line, run `pip install -r requirements.txt`. Please make sure Python is installed and is in your PATH (environment variable).

References

- Berens, J., Schneider, K., Görtz, S., Oster, S., & Burghoff, J. 2019. Early Detection of Students at Risk—Predicting Student Dropouts Using Administrative Student Data from German Universities and Machine Learning Methods. JEDM | Journal of Educational Data Mining, 11(3), 1–41. https://doi.org/10.5281/zenodo.3594771

- Chorowski, J. K., Bahdanau, D., Serdyuk, D., Cho, K., & Bengio, Y. 2015. Attention-based models for speech recognition. In Advances in Neural Information Processing Systems. pp. 577-585.

- Chung, J., Gulcehre, C., Cho, K., & Bengio, Y. 2014. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv:1412.3555.

- Dekker, G. W., Pechenizkiy, M., & Vleeshouwers, J. M. 2009. Predicting Students Drop Out: A Case Study. In Proceedings of the 2nd International Conference on Educational Data Mining (EDM’09, Córdoba, Spain, July 1-3, 2009). pp. 41-50.

- Haigh, T., & Priestley, M. 2020. Von Neumann Thought Turing's Universal Machine was 'Simple and Neat.': But That Didn't Tell Him How to Design a Computer. Communications of the ACM, 63(1), 26-32.

- Hochreiter, S., & Schmidhuber, J. 1997. Long short-term memory. Neural Computation, 9(8), 1735-1780.

- Mazur, M. 2015. A Step by Step Backpropagation Example. https://mattmazur.com/2015/03/17/a-step-by-step-backpropagation-example/

- Piech, C., Bassen, J., Huang, J., Ganguli, S., Sahami, M., Guibas, L. J., & Sohl-Dickstein, J. 2015. Deep knowledge tracing. In Advances in neural information processing systems. pp. 505-513.

- RapidMiner Studio. https://rapidminer.com/products/studio/

- Ren, Z., Ning, X., Lan, A. S., & Rangwala, H. 2019. Grade Prediction Based on Cumulative Knowledge and Co-taken Courses. In Proceedings of the 12th International Conference on Educational Data Mining (Montréal, Québec, Canada, July 2-5, 2019). pp. 158-167.

- Riordan, B., Horbach, A., Cahill, A., Zesch, T., & Lee, C. 2017. Investigating neural architectures for short answer scoring. In Proceedings of the 12th Workshop on Innovative Use of NLP for Building Educational Applications. pp. 159-168.

- Romero, C., Ventura, S., Espejo, P. G., & Hervás, C. 2008. Data Mining Algorithms to Classify Students. In Proceedings of the 1st International Conference on Educational Data Mining (Montréal, Québec, Canada, June 20-21, 2008). pp. 8-17.

- Sutskever, I., Vinyals, O., & Le, Q. V. 2014. Sequence to sequence learning with neural networks. In Advances in neural information processing systems. pp. 3104-3112.

- Tato, A., Nkambou, R., & Dufresne, A. 2019. Hybrid Deep Neural Networks to Predict Socio-Moral Reasoning Skills. In Proceedings of the 12th International Conference on Educational Data Mining (EDM’19). pp. 623-626.

- Wagner, K., Merceron, A., & Sauer, P. 2020. Accuracy of a cross-program model for dropout prediction in higher education. In Workshop Addressing Drop-Out Rates in Higher Education ADORE’2020, co-located with the 10th International Learning Analytics and Knowledge Conference, Frankfurt, Germany. Companion Proceedings, 744-749.

- Wang, L., Sy, A., Liu, L., & Piech, C. 2017. Deep knowledge tracing on programming exercises. In Proceedings of the Fourth (2017) ACM Conference on Learning@Scale. pp. 201-204.

- Xiong, X., Zhao, S., Van Inwegen, E. G., & Beck, J. E. 2016. Going deeper with deep knowledge tracing. International Educational Data Mining Society.

- Zhang, H., & Litman, D. 2019. Co-attention based neural network for source-dependent essay scoring. arXiv preprint arXiv:1908.01993.